(Peer-Reviewed) An acceleration strategy for randomize-then-optimize sampling via deep neural networks

Liang Yan 闫亮 ¹, Tao Zhou 周涛 ²

¹ School of Mathematics, Southeast University, Nanjing Center for Applied Mathematics, Nanjing, 211135, China

² LSEC, Institute of Computational Mathematics and Scientific/Engineering Computing, Academy of Mathematics and Systems Science, Chinese Academy of Sciences, Beijing 100190, China

Abstract

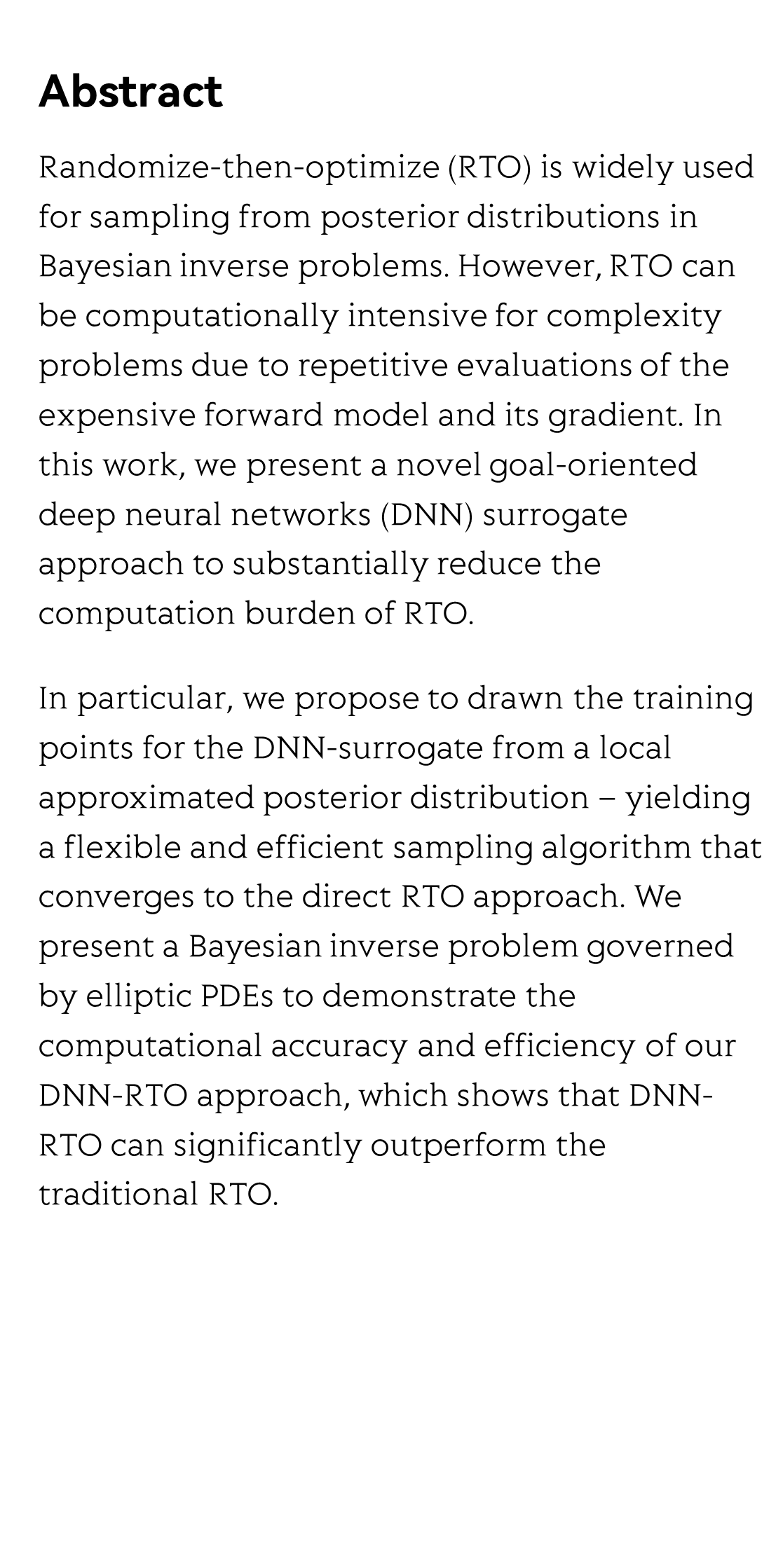

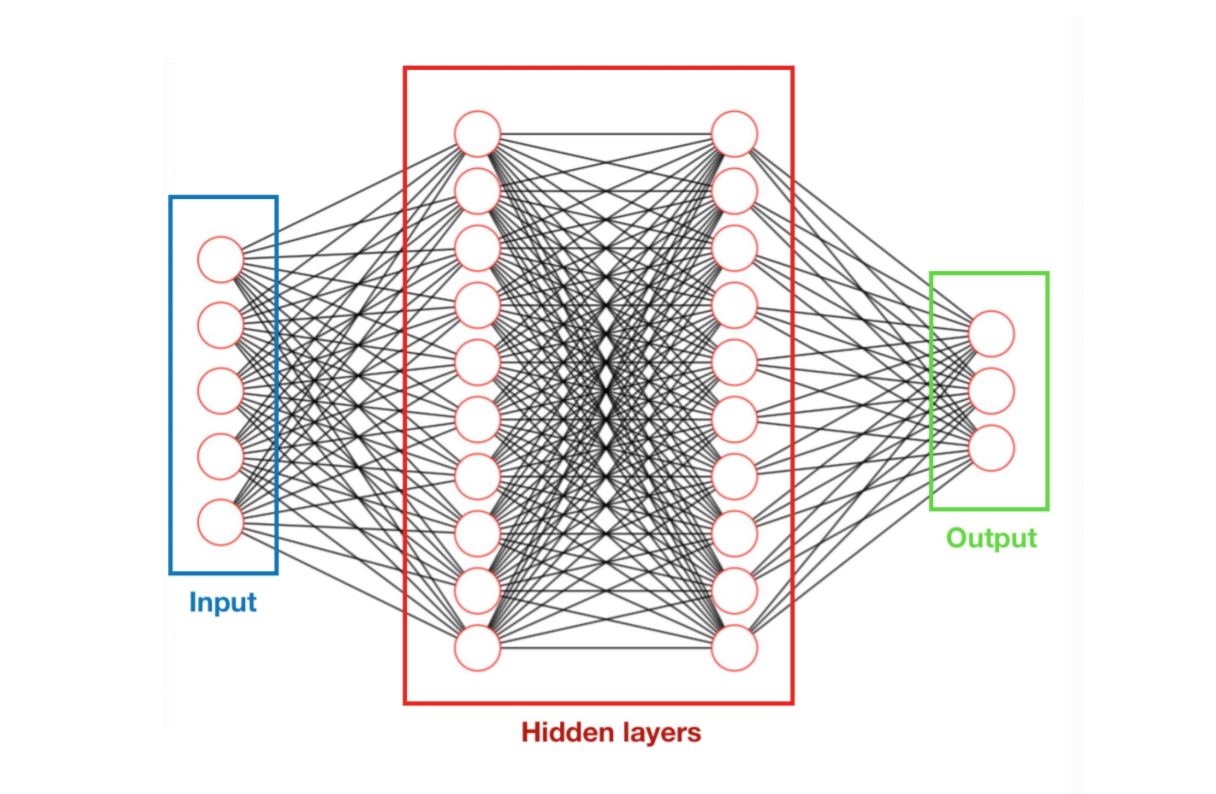

Randomize-then-optimize (RTO) is widely used for sampling from posterior distributions in Bayesian inverse problems. However, RTO can be computationally intensive for complex problems because it requires repeated evaluations of an expensive forward model and its gradient. In this work, we present a novel goal-oriented deep neural network (DNN) surrogate approach that substantially reduces the computational burden of RTO.
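Where the cost of RTO comes from can be seen on a deliberately small example. The following is a minimal, hypothetical sketch of a standard RTO formulation with a Metropolis-Hastings correction, not the authors' implementation. The scalar parameter, the cubic forward model `f` (standing in for an expensive PDE solve), the prior N(0, 1), the noise level 0.1, and the datum y = 1 are all assumptions. With a scalar parameter, the thin-QR basis Q of the Jacobian at the MAP point reduces to a unit vector, and each RTO optimization reduces to a monotone root-finding problem, solved here by bisection. Every call to `residual` inside `rto_sample` is a forward-model evaluation, which is the cost a surrogate is meant to remove.

```python
import math
import random

# Hypothetical scalar toy problem: prior theta ~ N(0, 1), datum y = f(theta) + N(0, 0.1^2), y = 1.0.
THETA_PR, SIG_PR, SIG_OBS, Y_OBS = 0.0, 1.0, 0.1, 1.0


def f(theta):
    """Hypothetical nonlinear forward model (stands in for an expensive PDE solve)."""
    return theta + 0.3 * theta ** 3


def df(theta):
    """Derivative of f (the gradient information RTO also needs)."""
    return 1.0 + 0.9 * theta ** 2


def residual(theta):
    """Whitened residual F(theta); the posterior density is proportional to exp(-||F||^2 / 2)."""
    return ((theta - THETA_PR) / SIG_PR, (f(theta) - Y_OBS) / SIG_OBS)


def jacobian(theta):
    """Jacobian J(theta) of F, a 2-vector because theta is scalar."""
    return (1.0 / SIG_PR, df(theta) / SIG_OBS)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def map_point(theta=0.0, iters=50):
    """Gauss-Newton iterations for the MAP point, argmin ||F(theta)||^2 / 2."""
    for _ in range(iters):
        r, j = residual(theta), jacobian(theta)
        theta -= dot(j, r) / dot(j, j)
    return theta


def rto_sample(q, xi, lo=-10.0, hi=10.0, iters=80):
    """RTO optimization step: solve Q^T F(theta) = xi by bisection.
    For this toy model Q^T F is strictly increasing, so the root is unique."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if dot(q, residual(mid)) < xi:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def log_weight(q, theta):
    """log(posterior / RTO proposal density), up to an additive constant."""
    r = residual(theta)
    return -math.log(abs(dot(q, jacobian(theta)))) - 0.5 * dot(r, r) + 0.5 * dot(q, r) ** 2


def rto_mh(n_samples, seed=0):
    """RTO proposals corrected by an independence Metropolis-Hastings step."""
    rng = random.Random(seed)
    theta_map = map_point()
    j = jacobian(theta_map)
    norm = math.sqrt(dot(j, j))
    q = (j[0] / norm, j[1] / norm)  # thin-QR basis of J(theta_map): a unit vector in 1-D
    current, current_lw = theta_map, log_weight(q, theta_map)
    chain, accepted = [], 0
    for _ in range(n_samples):
        # xi plays the role of Q^T eps with eps ~ N(0, I), which is N(0, 1) here
        proposal = rto_sample(q, rng.gauss(0.0, 1.0))
        lw = log_weight(q, proposal)
        if rng.random() < math.exp(min(0.0, lw - current_lw)):
            current, current_lw = proposal, lw
            accepted += 1
        chain.append(current)
    return chain, accepted / n_samples
```

For example, `chain, acc = rto_mh(5000)` returns 5000 chain states and the acceptance rate. Because this toy posterior is nearly Gaussian, the RTO weights vary little and a high fraction of proposals is accepted.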

In particular, we propose drawing the training points for the DNN surrogate from a locally approximated posterior distribution, which yields a flexible and efficient sampling algorithm that converges to the direct RTO approach. We use a Bayesian inverse problem governed by elliptic PDEs to demonstrate the computational accuracy and efficiency of our DNN-RTO approach; the results show that DNN-RTO can significantly outperform traditional RTO.
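The idea of training the surrogate on a local approximation of the posterior, rather than on the prior, can be sketched in a few lines. This sketch assumes that the local approximation is a Gaussian (Laplace-type) approximation centred at the MAP point with Gauss-Newton variance 1 / (J^T J), widened by a hypothetical factor `widen`; the paper's exact construction may differ. To stay dependency-free, a quadratic least-squares fit stands in for the DNN, and the scalar cubic model `f`, the prior N(0, 1), the noise level 0.1, and the datum y = 1 are hypothetical. `prior_surrogate` fits the same model class with the same training budget to prior samples, for comparison.

```python
import math
import random

# Hypothetical scalar toy problem: prior theta ~ N(0, 1), datum y = f(theta) + N(0, 0.1^2), y = 1.0.
THETA_PR, SIG_PR, SIG_OBS, Y_OBS = 0.0, 1.0, 0.1, 1.0


def f(theta):
    """Hypothetical 'expensive' forward model; each call stands for one PDE solve."""
    return theta + 0.3 * theta ** 3


def df(theta):
    return 1.0 + 0.9 * theta ** 2


def laplace_approximation(theta=0.0, iters=50):
    """Gauss-Newton MAP point and the Gauss-Newton posterior variance 1 / (J^T J) there."""
    for _ in range(iters):
        j = (1.0 / SIG_PR, df(theta) / SIG_OBS)
        r = ((theta - THETA_PR) / SIG_PR, (f(theta) - Y_OBS) / SIG_OBS)
        theta -= (j[0] * r[0] + j[1] * r[1]) / (j[0] ** 2 + j[1] ** 2)
    j = (1.0 / SIG_PR, df(theta) / SIG_OBS)
    return theta, 1.0 / (j[0] ** 2 + j[1] ** 2)


def fit_quadratic(xs, ys, center, scale):
    """Least-squares fit y ~ c0 + c1 t + c2 t^2 with t = (x - center) / scale.
    A stand-in for the DNN surrogate, solved through the 3x3 normal equations."""
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for x, y in zip(xs, ys):
        t = (x - center) / scale
        phi = (1.0, t, t * t)
        for i in range(3):
            b[i] += phi[i] * y
            for k in range(3):
                a[i][k] += phi[i] * phi[k]
    for i in range(3):  # forward elimination (the normal matrix is symmetric positive definite)
        for r in range(i + 1, 3):
            m = a[r][i] / a[i][i]
            for k in range(i, 3):
                a[r][k] -= m * a[i][k]
            b[r] -= m * b[i]
    c = [0.0] * 3
    for i in reversed(range(3)):  # back substitution
        c[i] = (b[i] - sum(a[i][k] * c[k] for k in range(i + 1, 3))) / a[i][i]

    def surrogate(x):
        t = (x - center) / scale
        return c[0] + c[1] * t + c[2] * t * t

    return surrogate


def local_surrogate(n_train=200, widen=3.0, seed=0):
    """Goal-oriented training: inputs drawn from N(MAP, (widen * sd)^2), not from the prior."""
    rng = random.Random(seed)
    theta_map, var = laplace_approximation()
    sd = widen * math.sqrt(var)
    xs = [rng.gauss(theta_map, sd) for _ in range(n_train)]
    return fit_quadratic(xs, [f(x) for x in xs], theta_map, sd)


def prior_surrogate(n_train=200, seed=0):
    """Baseline: same model class and budget, inputs drawn from the prior."""
    rng = random.Random(seed)
    xs = [rng.gauss(THETA_PR, SIG_PR) for _ in range(n_train)]
    return fit_quadratic(xs, [f(x) for x in xs], THETA_PR, SIG_PR)
```

Comparing `local_surrogate()` and `prior_surrogate()` against `f` on the interval MAP ± 2 standard deviations illustrates the goal-oriented design: with the same budget of 200 model evaluations, the locally trained fit is far more accurate where the posterior concentrates, while the prior-trained fit spends its accuracy where the posterior has negligible mass.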