(Preprint) A Single Example Can Improve Zero-Shot Data Generation

Pavel Burnyshev ¹, Valentin Malykh ¹ ², Andrey Bout ¹, Ekaterina Artemova ¹ ³, Irina Piontkovskaya ¹

¹ Huawei Noah's Ark Lab, Moscow, Russia

² Kazan Federal University, Kazan, Russia

³ HSE University, Moscow, Russia

arXiv, 2021-08-16

Abstract

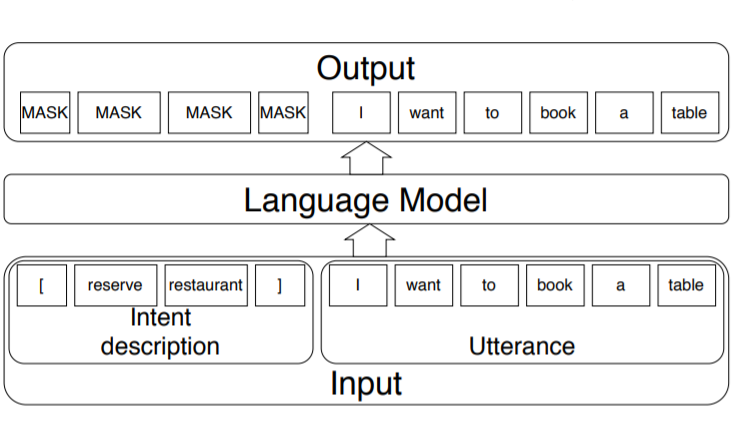

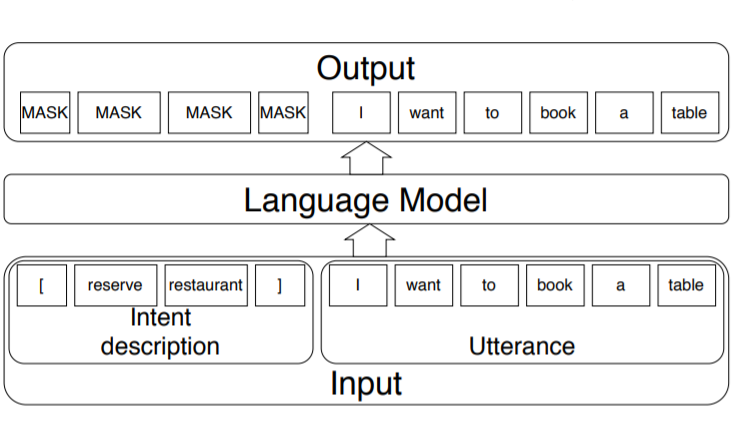

Sub-tasks of intent classification, such as robustness to distribution shift, adaptation to specific user groups and personalization, and out-of-domain detection, require extensive and flexible datasets for experiments and evaluation. As collecting such datasets is time- and labor-consuming, we propose to use text generation methods to create them. The generator should be trained to generate utterances that belong to a given intent.

We explore two approaches to generating task-oriented utterances. In the zero-shot approach, the model is trained to generate utterances from seen intents and is then used to generate utterances for intents unseen during training. In the one-shot approach, the model is additionally presented with a single utterance from a test intent. We perform a thorough automatic and human evaluation of the data generated with the two proposed approaches. Our results reveal that the attributes of the generated data are close to those of the original test sets, which were collected via crowd-sourcing.
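
To make the difference between the two settings concrete, the following minimal sketch shows how the conditioning input to a generator might differ between them. The prompt layout, the `build_prompt` helper, and the sample intent and utterance are hypothetical illustrations; the abstract does not specify the actual input format.

```python
from typing import Optional


def build_prompt(intent: str, example: Optional[str] = None) -> str:
    """Build a conditioning prompt for an utterance generator.

    The "intent:/example:/utterance:" layout is an assumption made for
    illustration, not the format used in the paper.
    """
    parts = [f"intent: {intent}"]
    if example is not None:
        # One-shot: include a single utterance from the target intent.
        parts.append(f"example: {example}")
    # The generator would be expected to continue after this marker.
    parts.append("utterance:")
    return "\n".join(parts)


# Zero-shot: only the name of the unseen intent is given.
zero_shot = build_prompt("book_flight")

# One-shot: the intent name plus one utterance from that intent.
one_shot = build_prompt("book_flight", "I need a plane ticket to Kazan")
```

In both cases the same generator would be queried; only the amount of information about the unseen intent changes.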
