(Preprint) CLSEBERT: Contrastive Learning for Syntax Enhanced Code Pre-Trained Model

Xin Wang ¹, Yasheng Wang ², Pingyi Zhou ², Meng Xiao ², Yadao Wang ², Li Li ³, Xiao Liu ⁴, Hao Wu 武浩 ⁵, Jin Liu 刘进 ¹, Xin Jiang ²

¹ School of Computer Science, Wuhan University 武汉大学 计算机学院

² Noah's Ark Lab, Huawei 华为 诺亚方舟实验室

³ Faculty of Information Technology, Monash University

⁴ School of Information Technology, Deakin University

⁵ School of Information Science and Engineering, Yunnan University 云南大学 信息学院

arXiv, 2021-08-10

Abstract

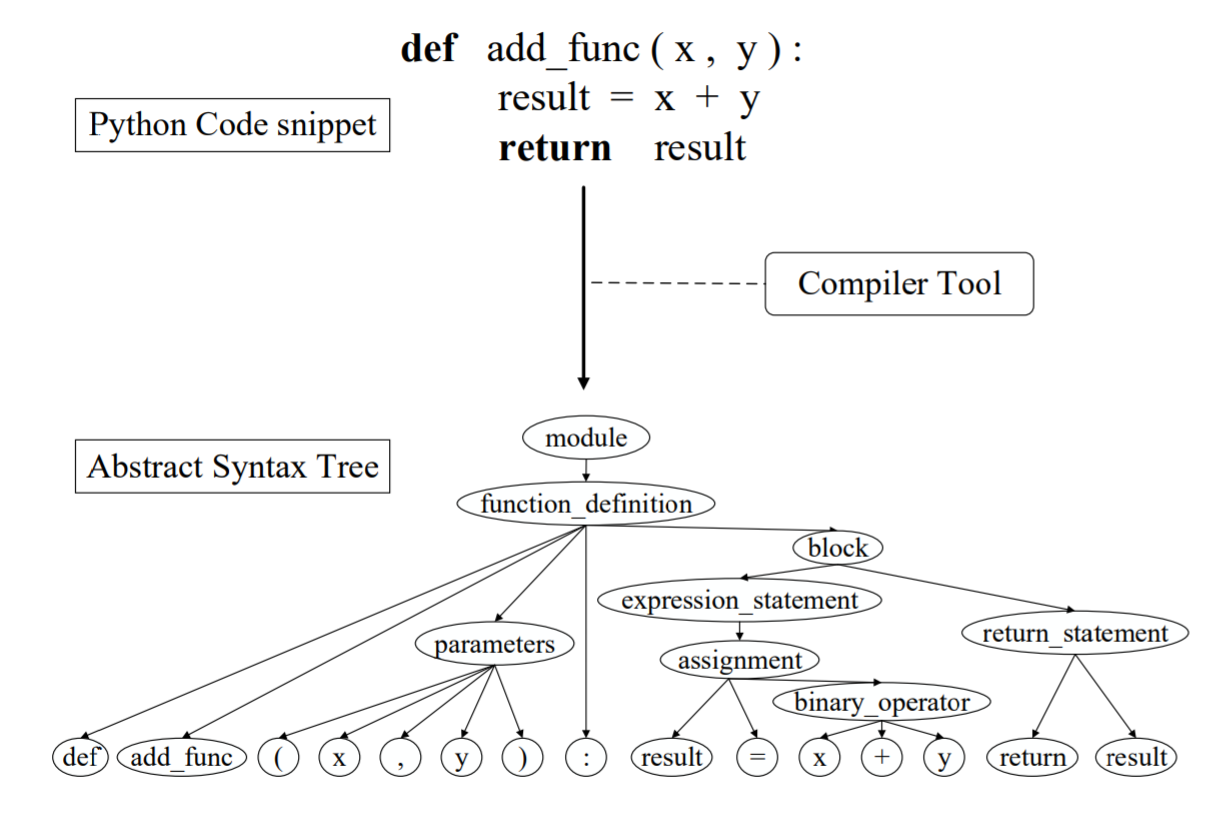

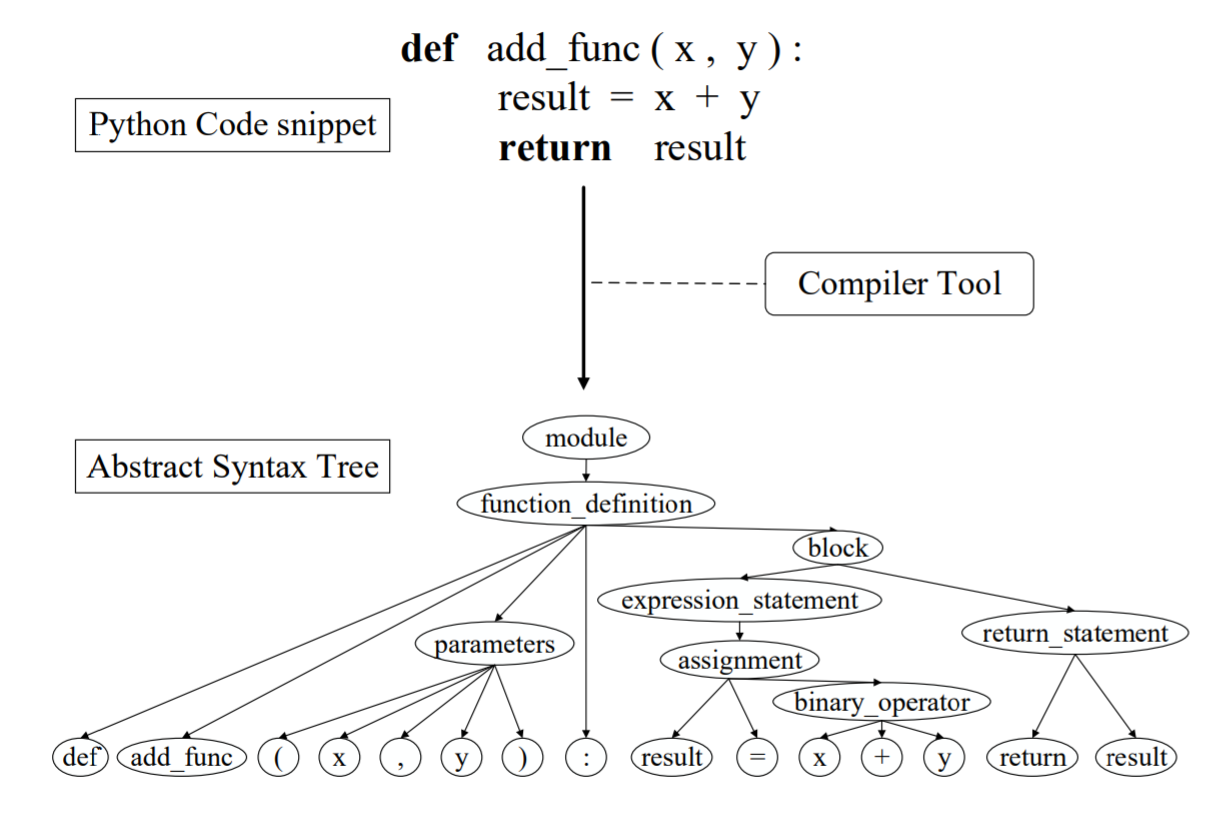

Pre-trained models for programming languages have proven their value in various code-related tasks, such as code search, code clone detection, and code translation. Currently, most pre-trained models treat a code snippet as a sequence of tokens or focus only on the data flow between code identifiers.

However, these models ignore the rich syntax and hierarchy of code, which provide important structural information and semantic rules that can enhance code representations. In addition, although BERT-based code pre-trained models achieve high performance on many downstream tasks, the sequence representations derived natively from BERT have been shown to be of low quality, and they perform poorly on code matching and similarity tasks.

To address these problems, we propose CLSEBERT, a Contrastive Learning Framework for Syntax Enhanced Code Pre-Trained Model, to deal with various code intelligence tasks. In the pre-training stage, we consider the code syntax and hierarchy contained in the Abstract Syntax Tree (AST) and leverage contrastive learning to learn noise-invariant code representations. Besides masked language modeling (MLM), we also introduce two novel pre-training objectives. One is to predict the edges between nodes in the abstract syntax tree, and the other is to predict the types of code tokens. Through extensive experiments on four code intelligence tasks, we demonstrate the effectiveness of our proposed model.
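To make the two syntax-related objectives more concrete, the following is a minimal sketch of the kind of supervision signal they rely on: parent-child edges between AST nodes, and a type label for each code token. It is not the authors' pipeline. It assumes Python source and uses the standard-library `ast` and `tokenize` modules as stand-ins for whatever parser the model actually uses; the function names `ast_edges` and `token_types` are hypothetical.

```python
import ast
import io
import tokenize


def ast_edges(source):
    """Return (parent_type, child_type) pairs for every parent-child AST edge."""
    tree = ast.parse(source)
    edges = []
    for parent in ast.walk(tree):
        for child in ast.iter_child_nodes(parent):
            edges.append((type(parent).__name__, type(child).__name__))
    return edges


def token_types(source):
    """Return (token_text, lexical_type) pairs, skipping layout-only tokens."""
    # Assumption: layout tokens carry no useful type label for this illustration.
    skip = {
        tokenize.NEWLINE, tokenize.NL, tokenize.INDENT,
        tokenize.DEDENT, tokenize.ENDMARKER, tokenize.COMMENT,
    }
    pairs = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type in skip:
            continue
        pairs.append((tok.string, tokenize.tok_name[tok.type]))
    return pairs


snippet = "def add(a, b):\n    return a + b\n"
edges = ast_edges(snippet)   # candidate targets for an edge-prediction objective
types = token_types(snippet)  # candidate targets for a token-type objective
```

In a pre-training setup along the lines the abstract describes, pairs like these would presumably serve as labels: the model would be asked whether an edge exists between two nodes, and which type a given token has. The coarse lexical types produced by `tokenize` (`NAME`, `OP`, and so on) are only an illustration; the type vocabulary used by CLSEBERT is not specified in the abstract.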

A review on optical torques: from engineered light fields to objects

Tao He, Jingyao Zhang, Din Ping Tsai, Junxiao Zhou, Haiyang Huang, Weicheng Yi, Zeyong Wei, Yan Zu, Qinghua Song, Zhanshan Wang, Cheng-Wei Qiu, Yuzhi Shi, Xinbin Cheng

Opto-Electronic Science

2025-11-25