(Preprint) Towards Understanding the Generative Capability of Adversarially Robust Classifiers

Yao Zhu ¹, Jiacheng Ma ², Jiacheng Sun ², Zewei Chen ², Rongxin Jiang 蒋荣欣 ¹, Zhenguo Li ²

¹ Zhejiang University

² Huawei Noah’s Ark Lab

arXiv, 2021-08-20

Abstract

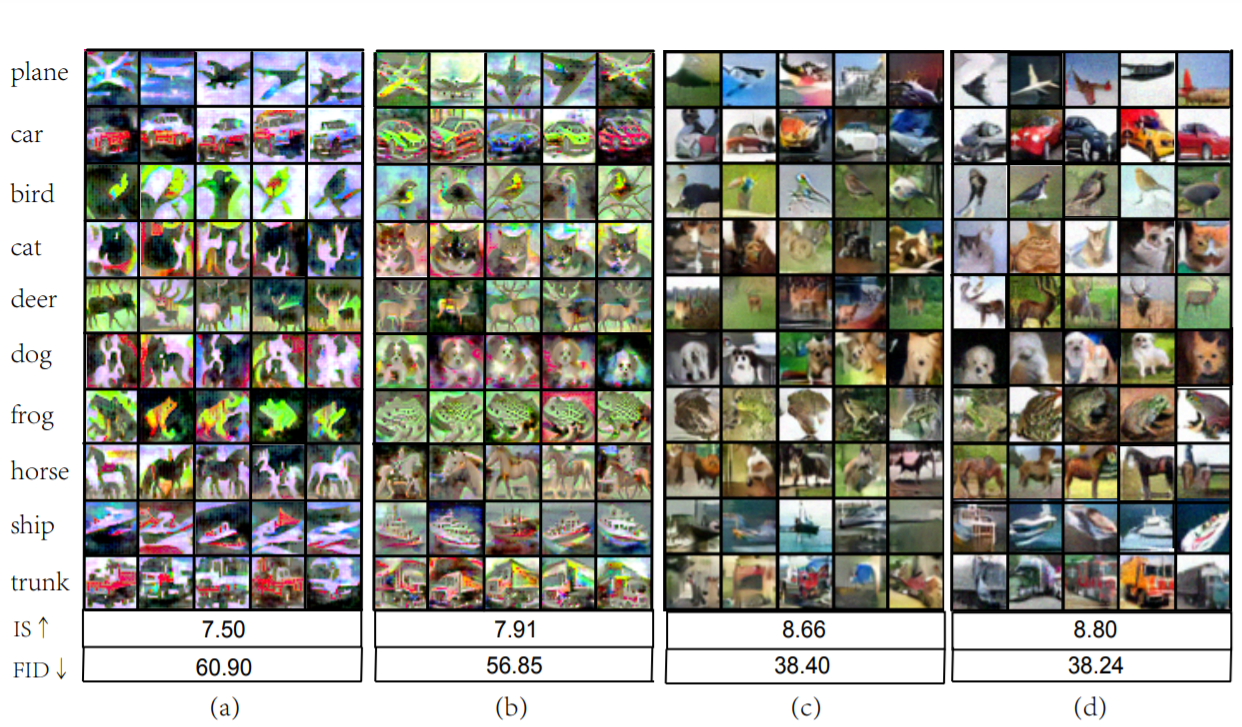

Recent work has observed that adversarially robust classifiers can generate images comparable in quality to those produced by generative models. We investigate this phenomenon from an energy perspective and offer a novel explanation. We reformulate adversarial example generation, adversarial training, and image generation in terms of an energy function. We find that adversarial training yields an energy function that is flat and low around the real data, which is key to generative capability.

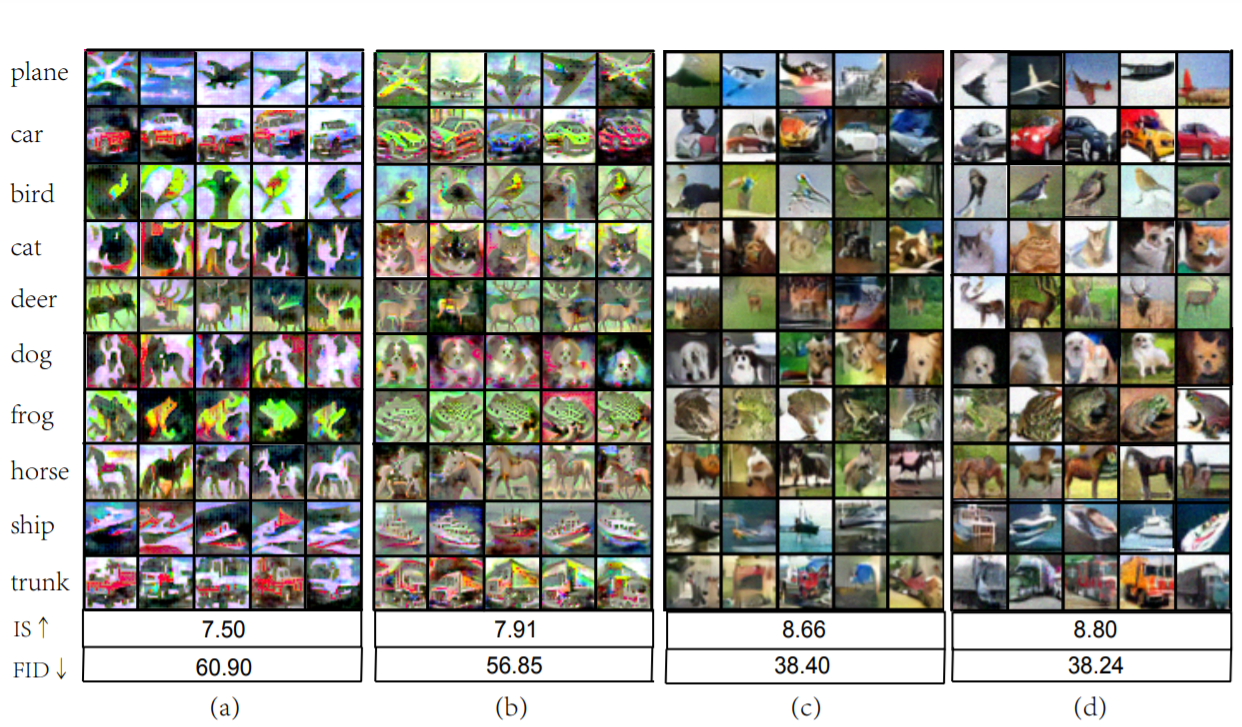

Based on this understanding, we propose an improved adversarial training method, Joint Energy Adversarial Training (JEAT), which generates high-quality images and achieves new state-of-the-art robustness under a wide range of attacks. On CIFAR-10, images generated by JEAT reach an Inception Score of 8.80, compared with 7.50 for the original robust classifiers. In particular, without extra training data, we achieve new state-of-the-art robustness on CIFAR-10 (from 57.20% to 62.04%) and CIFAR-100 (from 30.03% to 30.18%).
