(Preprint) Modeling Relevance Ranking under the Pre-training and Fine-tuning Paradigm

Lin Bo ¹, Liang Pang 庞亮 ³, Gang Wang ⁴, Jun Xu 徐君 ², XiuQiang He 何秀强 ⁴, Ji-Rong Wen 文继荣 ²

¹ School of Information, Renmin University of China, Beijing, China

² Gaoling School of Artificial Intelligence, Renmin University of China, Beijing, China

³ Institute of Computing Technology, Chinese Academy of Sciences, Beijing, China

⁴ Huawei Noah’s Ark Lab, Hong Kong, China

arXiv, 2021-08-12

Abstract

Recently, pre-trained language models such as BERT have been applied to document ranking for information retrieval. These methods usually first pre-train a general language model on a large unlabeled corpus and then conduct ranking-specific fine-tuning on expert-labeled relevance datasets. Though preliminary successes have been observed in a variety of IR tasks, considerable room for improvement remains.

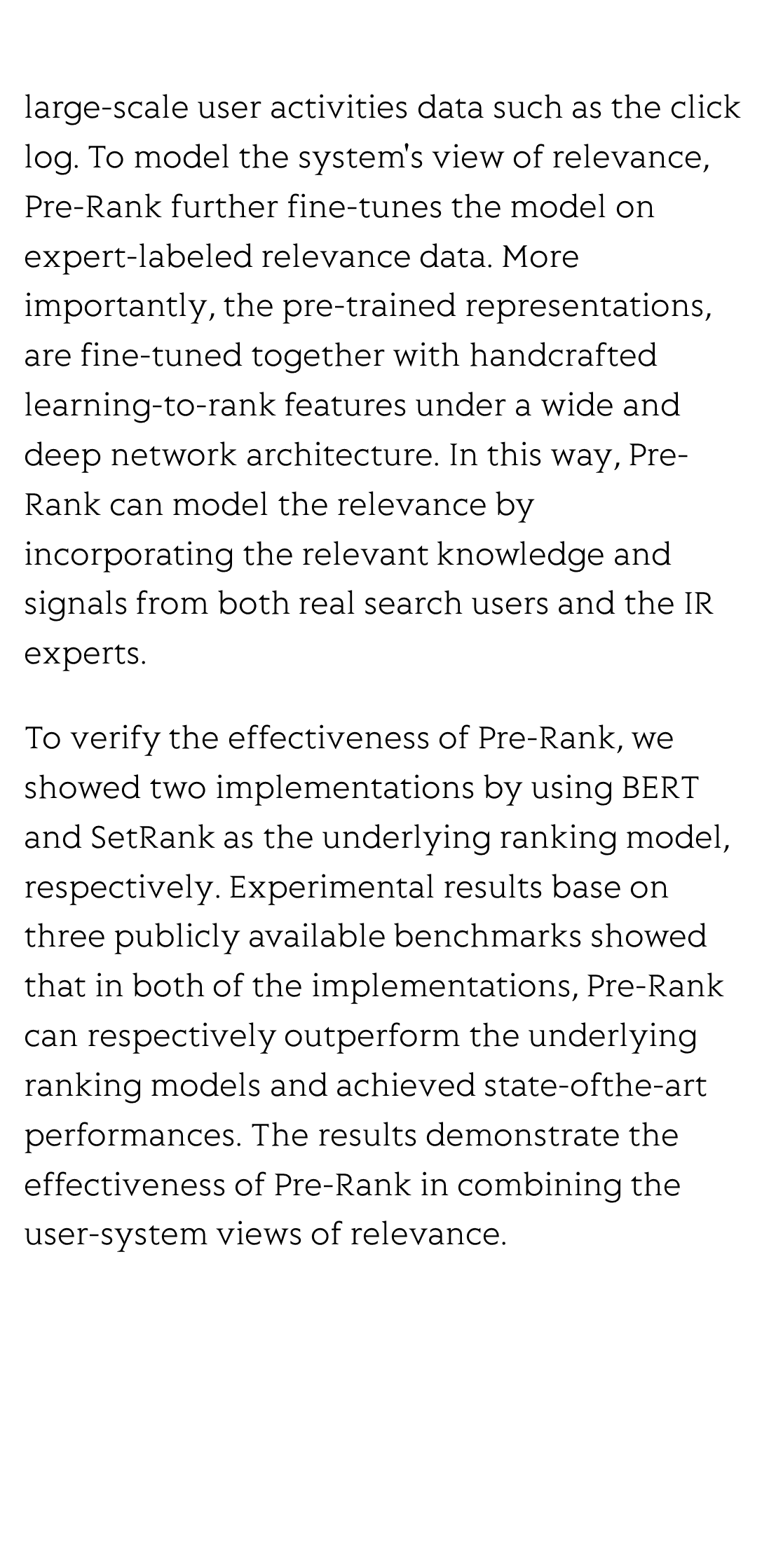

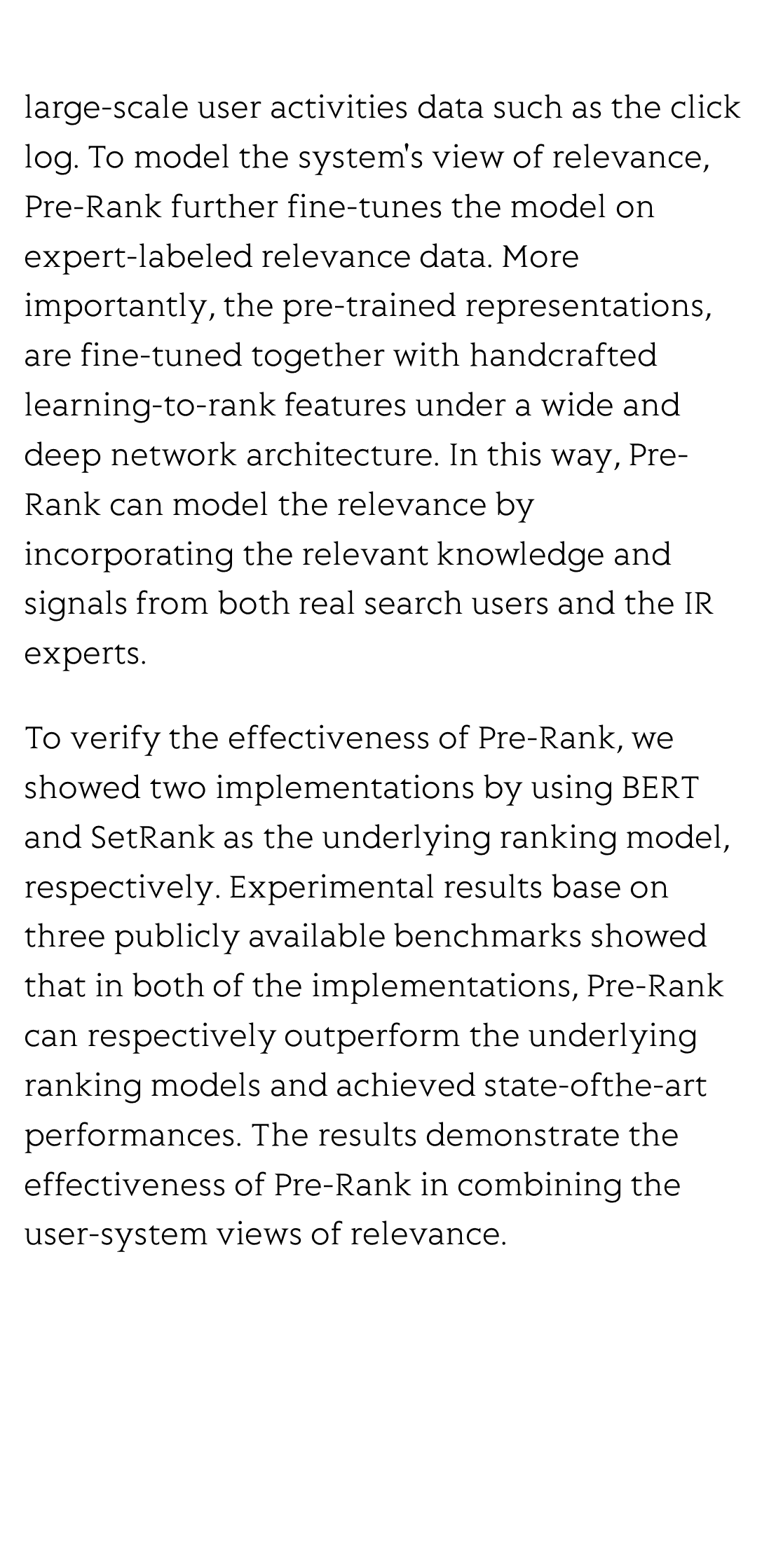

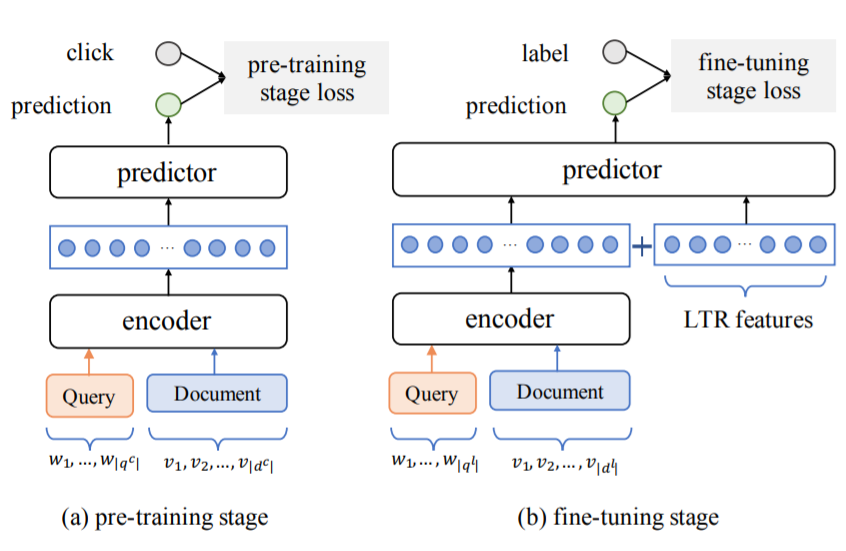

Ideally, an IR system would model relevance from a user-system dualism: the user's view and the system's view. The user's view judges relevance based on the activities of “real users”, while the system's view focuses on relevance signals from the system side, e.g., from experts or algorithms. Inspired by these user-system relevance views and the success of pre-trained language models, in this paper we propose a novel ranking framework called Pre-Rank that takes both the user's view and the system's view into consideration under the pre-training and fine-tuning paradigm. Specifically, to model the user's view of relevance, Pre-Rank pre-trains the initial query-document representations on large-scale user activity data such as click logs. To model the system's view of relevance, Pre-Rank further fine-tunes the model on expert-labeled relevance data. More importantly, the pre-trained representations are fine-tuned together with handcrafted learning-to-rank features under a wide and deep network architecture. In this way, Pre-Rank models relevance by incorporating the relevant knowledge and signals from both real search users and IR experts.

To verify the effectiveness of Pre-Rank, we present two implementations that use BERT and SetRank, respectively, as the underlying ranking model. Experimental results on three publicly available benchmarks show that, in both implementations, Pre-Rank outperforms the underlying ranking model and achieves state-of-the-art performance. The results demonstrate the effectiveness of Pre-Rank in combining the user-system views of relevance.
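
As an illustration of the wide and deep combination described in the abstract, the following is a minimal sketch of how a pre-trained query-document representation (deep part) and handcrafted learning-to-rank features (wide part) might be combined into one relevance score. It is not the authors' implementation: the function names, the parameter layout, the single hidden layer, and the toy numbers are all assumptions made for illustration, and only the forward scoring pass is shown, not pre-training on click logs or fine-tuning on expert labels.

```python
# Hypothetical sketch of wide-and-deep scoring; not the Pre-Rank code.

def wide_deep_score(rep, feats, params):
    """Score one query-document pair.

    rep    : pre-trained query-document representation (list of floats)
    feats  : handcrafted learning-to-rank features (list of floats)
    params : dict with assumed keys W1, b1, w_deep, w_wide, bias
    """
    # Deep part: one ReLU hidden layer over the pre-trained representation.
    hidden = [
        max(0.0, sum(w * v for w, v in zip(row, rep)) + b)
        for row, b in zip(params["W1"], params["b1"])
    ]
    deep = sum(w * h for w, h in zip(params["w_deep"], hidden))
    # Wide part: a linear model over the handcrafted features.
    wide = sum(w * f for w, f in zip(params["w_wide"], feats))
    return deep + wide + params["bias"]


def rank(candidates, params):
    """Sort candidate documents by descending wide-and-deep score."""
    return sorted(
        candidates,
        key=lambda c: wide_deep_score(c["rep"], c["feats"], params),
        reverse=True,
    )


# Toy parameters chosen by hand so the arithmetic is easy to follow.
params = {
    "W1": [[1.0, 0.0], [0.0, 1.0]],
    "b1": [0.0, 0.0],
    "w_deep": [1.0, 1.0],
    "w_wide": [0.5],
    "bias": 0.0,
}

candidates = [
    {"id": "d1", "rep": [1.0, -2.0], "feats": [2.0]},
    {"id": "d2", "rep": [3.0, 1.0], "feats": [0.0]},
]
ranking = [c["id"] for c in rank(candidates, params)]
```

In this sketch, fine-tuning would update the wide and deep parameters jointly, so the expert-labeled data would shape both how the pre-trained representation is used and how the handcrafted features are weighted.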
